Methodology Paper

Introduction:

At this very moment, the largest global threat is a pandemic. With COVID-19 rapidly spreading around the globe, many national governments are beginning to give more weight to the threat of epidemics. One of the main dangers of an epidemic is our lack of knowledge about the virus behind it. Information about the virus is integral to combating it, and this matters most early in an outbreak. With sufficient knowledge, we would be able to treat patients effectively, slow the spread, and even predict a virus's course. Today, a virus can leap around the globe with ease, thanks to our advanced (and readily available) modes of transportation. Consider COVID-19's spread from Wuhan, China, to the rest of the world: the speed with which it reached every corner of the globe is truly disturbing. Although this epidemic now seems relatively under control, what about the next one? Are we prepared for the next deadly outbreak? It could be on the horizon.

Are we teaching technology, or is technology teaching us?

One asset we do have in this battle against epidemics is data: big data. The challenge lies in analyzing that data and putting it to use. One tool in our arsenal is the neural network. By feeding a computer large quantities of data, we can essentially "teach" it to recognize patterns that the human eye cannot see. Neural networks are loosely modeled on the human brain, yet they can perform calculations that a human brain cannot. This kind of pattern recognition is integral to the field of data science: raw data on its own is of little use, and the conclusions drawn from it are what is truly valuable.

The technique continues to develop for more advanced applications, and it works hand-in-hand with a variety of other data science methods. Linear regression, for example, is closely related to neural networks. A network can detect patterns in data and produce a linear model that describes (and quantifies) the relationship between two variables. As one data scientist puts it, "Linear regression is one of the most simple examples of machine learning algorithms we can think of" (Rocca 1). A neural network can be designed to take data points as inputs and find a function that accurately models them. Sometimes, however, the relationship between the variables is not linear. One approach to this problem is to use the function's derivative: near any given point, a smooth function is approximately linear, with a slope equal to its derivative at that point. Overall, these two data science methods are highly complementary, and together they can help answer questions that we have not been able to answer until now.
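
To make the link between linear regression and neural networks concrete, here is a minimal Python sketch (standard library only). It fits $f(x) = ax + b$ in two ways: with the closed-form least-squares formulas, and with a single "neuron" (one weight, one bias, no activation function) trained by gradient descent. The data, learning rate, number of training steps, and function names are all made-up assumptions for illustration, not taken from Rocca's article.

```python
import random


def fit_least_squares(xs, ys):
    """Closed-form simple linear regression: slope a and intercept b of y = a*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    a = covariance / variance
    b = mean_y - a * mean_x
    return a, b


def train_linear_neuron(xs, ys, learning_rate=0.05, epochs=2000):
    """One 'neuron' with a single weight, a bias and no activation function,
    trained by gradient descent to minimize the mean squared error."""
    weight, bias = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction errors of the current model on every data point
        errors = [weight * x + bias - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(error^2) w.r.t. weight and bias
        grad_weight = sum(2 * e * x for e, x in zip(errors, xs)) / n
        grad_bias = sum(2 * e for e in errors) / n
        # Step both parameters "downhill" to reduce the error
        weight -= learning_rate * grad_weight
        bias -= learning_rate * grad_bias
    return weight, bias


if __name__ == "__main__":
    random.seed(0)
    # Made-up data: y = 3x + 2 plus a little random noise
    xs = [i / 10 for i in range(50)]
    ys = [3 * x + 2 + random.gauss(0, 0.1) for x in xs]
    print("least squares (a, b):", fit_least_squares(xs, ys))
    print("trained neuron (w, b):", train_linear_neuron(xs, ys))
```

Both approaches should arrive at essentially the same slope and intercept. That is one way to read the claim that linear regression is among the simplest machine learning models: a one-neuron network with no activation function, trained to minimize squared error, is a linear regression.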

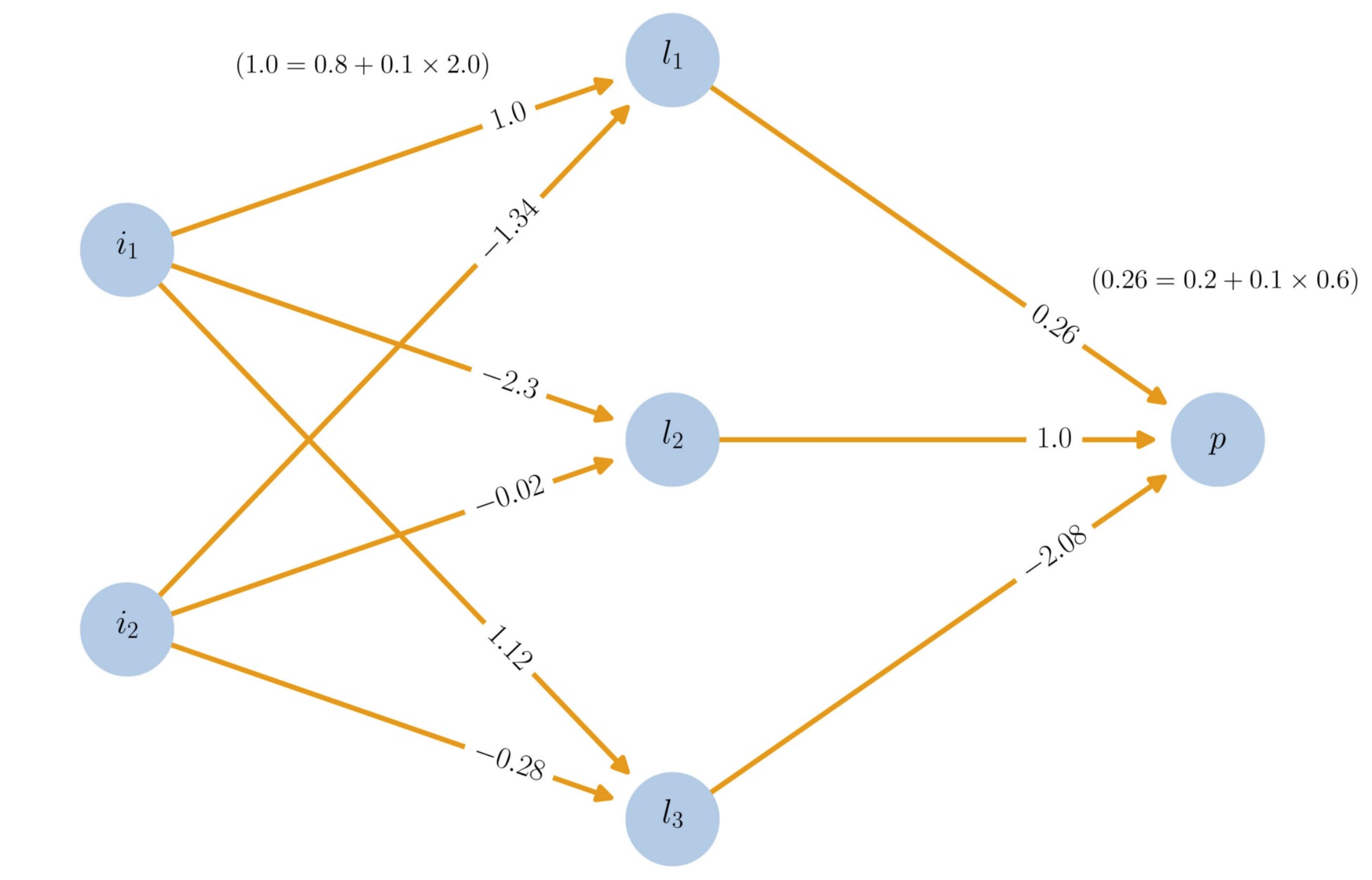

Linear regression becomes more complex when more inputs are involved; this is called multiple (or multilinear) regression. The function $f(x) = ax + b$ (where $a$ and $b$ are parameters learned from the data) then becomes $f(x, y) = ax + by + c$, with a separate coefficient for each input. Now consider big data: imagine the calculations involved in linear regression with countless inputs. It is far easier to let a computer, such as a neural network, perform these calculations and find the linear model. The accompanying image shows an example of a complicated multiple linear regression model implemented as a neural network (Rocca 1). In this model, "i" are the inputs and "p" is the output. The numbers represent the "optimization" of the neural network, which essentially means fitting the model more closely to the data to reduce the error in the output.
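
A minimal sketch of the two-input case, assuming made-up data and a hand-written gradient-descent loop (standard-library Python; the function names are hypothetical). It fits $f(x, y) = ax + by + c$ and records the mean squared error as training proceeds, which is one concrete reading of the "optimization" described above: each step nudges the parameters so the model fits the data a little better.

```python
import random


def mean_squared_error(params, data):
    """Average squared gap between f(x, y) = a*x + b*y + c and each target t."""
    a, b, c = params
    return sum((a * x + b * y + c - t) ** 2 for x, y, t in data) / len(data)


def fit_two_input_linear(data, learning_rate=0.1, epochs=3000, log_every=500):
    """Fit a, b, c by gradient descent on the mean squared error.

    `data` is a list of (x, y, target) triples. Returns the fitted (a, b, c)
    and the error recorded every `log_every` steps plus once at the end.
    """
    a = b = c = 0.0
    n = len(data)
    history = []
    for step in range(epochs):
        if step % log_every == 0:
            history.append(mean_squared_error((a, b, c), data))
        # Residual (prediction minus target) for every data point
        residuals = [(a * x + b * y + c - t, x, y) for x, y, t in data]
        # One partial derivative per parameter
        grad_a = sum(2 * r * x for r, x, _ in residuals) / n
        grad_b = sum(2 * r * y for r, _, y in residuals) / n
        grad_c = sum(2 * r for r, _, _ in residuals) / n
        a -= learning_rate * grad_a
        b -= learning_rate * grad_b
        c -= learning_rate * grad_c
    history.append(mean_squared_error((a, b, c), data))
    return (a, b, c), history


if __name__ == "__main__":
    random.seed(0)
    # Made-up data generated from t = 2x - 1.5y + 0.5 plus a little noise
    data = []
    for _ in range(200):
        x, y = random.random(), random.random()
        data.append((x, y, 2 * x - 1.5 * y + 0.5 + random.gauss(0, 0.05)))
    params, history = fit_two_input_linear(data)
    print("fitted a, b, c:", [round(p, 2) for p in params])
    print("error during training:", [round(e, 5) for e in history])
```

With more inputs, the same loop simply gains one weight and one gradient per input. In practice, libraries such as scikit-learn's `LinearRegression` solve the same least-squares problem directly, without a hand-written training loop.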

Another important aspect of how these methods work together is the interpretability of their results. "Machine Learning models are going to assist humans for some (possibly important) tasks (in health, finance, driving…) and we sometimes want to be able to understand how the results returned by the models are obtained," says Joseph Rocca (Rocca 1). It's one thing to make a computer solve a problem; it's another to learn how the computer solved it. One TED-Ed lesson by Brian Christian focuses on this idea of learning from machines. Titled "How to manage your time more effectively (according to machines)," it discusses how to apply computer science methods to daily life to become more efficient (Christian 1). As we advance our technology, we must use it to advance ourselves as well.
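
One reason linear models are considered interpretable is that a prediction splits exactly into one contribution per input. The sketch below illustrates this with hypothetical weights and inputs made up for the example (they do not come from Rocca's article or any real dataset), and `explain_prediction` is a hypothetical helper name.

```python
def explain_prediction(weights, bias, inputs):
    """Split a linear model's prediction into one contribution per input.

    For f = w1*x1 + w2*x2 + ... + bias, each term w_i * x_i is exactly how much
    input i added to (or subtracted from) the output.
    """
    contributions = {name: weights[name] * value for name, value in inputs.items()}
    prediction = bias + sum(contributions.values())
    return prediction, contributions


if __name__ == "__main__":
    # Hypothetical fitted weights and input values, for illustration only
    weights = {"x": 2.0, "y": -1.5}
    prediction, parts = explain_prediction(weights, bias=0.5, inputs={"x": 1.0, "y": 2.0})
    print("prediction:", prediction)  # 0.5 + 2.0 - 3.0 = -0.5
    # List the inputs from most to least influential
    for name, amount in sorted(parts.items(), key=lambda kv: -abs(kv[1])):
        print(f"{name}: {amount:+.2f}")
```

A deep neural network with non-linear activations has no such exact decomposition, which is part of why its results are harder to explain.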

The question "are we prepared for the next outbreak?" is an exploratory one: it aims to explore the future of data science's application to battling epidemics. This is a worldwide problem as well as a worldwide effort. Computer scientists across the globe are developing neural networks, and the work has become a global, collaborative endeavor. This is the way it should be; we are all in this together. My hope is that one day we will learn enough from technology to see patterns that we historically could not see with the human eye. The combined power of these two data science methods is exciting, and it is apparent that artificial intelligence is still in its early stages. It will continue to develop alongside us, learning from us as well as teaching us.

There is one notable gap in the literature on these topics: very few resources are dedicated to teaching beginners how to start working with machine learning. If this science is to develop, it must be easily accessible to everyone; otherwise, the next Einstein may end up coding the next Halo game instead of a cancer-curing neural network. This technology offers enormous power and capability, and we cannot take that for granted. There also needs to be a push to apply this technology more widely in our everyday lives, which may motivate more people to pursue careers as artificial intelligence engineers. This is the field of the future, and our best chance to combat unforeseen disasters.

Sources:

Christian, Brian. "How to Manage Your Time More Effectively (According to Machines)." YouTube, 2 Jan. 2018, https://www.youtube.com/watch?v=iDbdXTMnOmE&t=3s.

Gill, Navdeep Singh. "Artificial Neural Network Applications and Algorithms." XenonStack, XenonStack, 24 Mar. 2020, www.xenonstack.com/blog/artificial-neural-network-applications/.

Moné, Lesa. "The Era of Quantum Computing and Big Data Analytics." Enterprise Architecture Management, LeanIX GmbH, 18 Jan. 2019, www.leanix.net/en/blog/quantum-computing-and-big-data-analytics.

Rocca, Joseph. "A Gentle Journey from Linear Regression to Neural Networks." Medium, Towards Data Science, 13 July 2019, towardsdatascience.com/a-gentle-journey-from-linear-regression-to-neural-networks-68881590760e.